This is an archive of a Twitter thread I did while reading the eSafety Strategy 2022-25, a document released by Australia’s eSafety Commissioner. It is part of a long-running series of posts called Too Long; Justin Read.

I see eSafety has released its eSafety Strategy 2022-25. ping @stilgherrian @efa_oz @DRWaus @samfloreani @rohan_p @NewtonMark and other fans of internet regulation.

I shall once again take a break from paid work I want to avoid so I can read this and tweet the highlights*. That’s right, it’s time for another edition of Too Long; Justin Read!

For those new to the idea: Too Long; Justin Read is where I read boring documents so you don’t have to. I summarise the highlights as I see them, with liberal quantities of snark, memes, and praise as I deem appropriate.

When I remember, I put the threads up on my website so you can find them later: https://www.eigenmagic.com/tag/tljr/

Let’s dive into Australia’s eSafety Strategy 2022-25! Read along with me by grabbing the PDF from here: https://www.esafety.gov.au/about-us/who-we-are/strategy

eSafety Commissioner’s foreword

We start with the Commissioner’s foreword. The eSafety Commissioner has broad powers that can be exercised with little oversight, so how they view the world is kinda important.

“Over our short history, we’ve gone from the most important government regulator you’ve never heard of to one of the most important government regulators we hope you’ll hear much more from.” I’m sure the ACCC, ASIC, ATO, AHRC and others will be glad to learn this.

“all forms of online abuse reported to eSafety have grown considerably since before the COVID-19 pandemic” Well yes. Laws were passed that require people to do that, and eSafety did a bunch of advertising to encourage people to do more reporting.

“These elevated levels of abuse have become our new normal.” I assume they mean the things that are being reported more, not the reports themselves. Noticing things more doesn’t mean they are actually happening more. As per this famous chart of incidence of left-handedness.

“This is playing out online in numerous ways, as is the tension between a range of fundamental rights.” The tension between rights is, of course, unique to the Internet so we need not concern ourselves with a study of history.

“These include where tech companies scan for known child sexual abuse material on their services: is an adult’s right to privacy superior to the rights of a child victim?” That’s a pretty offensive trivialisation of serious issues.

It’d be nice to have a nuanced conversation with an Australian regulator about technology one day. Apparently that day is not today.

“Or where an online service attempts to balance freedom of expression through protections against hate speech and extremism” It’s quite handy that speech only happens online and nowhere else, because that does simplify matters.

“We utilise powers under the Act assertively but judiciously, pairing our action with the imperatives of fairness and proportionality.” A noble goal, to be sure. The Australian people will enjoy verifying that this is indeed happening.

“At eSafety, we approach our work through three lenses – prevention, protection, and proactive change. All this work is underpinned by the scaling impact and power of strategic partnerships.” Now that’s something I’d like to dig into a bit more.

The alliteration of 3 Ps is a nice rhetorical technique. But what exactly is getting prevented? And the protection is for whom, from what? And what does “proactive change” mean? Let us look for this as we read.

Since this is “underpinned by the scaling impact and power of strategic partnerships” we probably want to know who these partnerships are with.

eSafety makes much of its Safety by Design brand. I’ve made criticisms of its approach elsewhere so I won’t repeat them here other than to say: Safety for whom, from what?

“Since we know the internet will never be free from malice, conflict, and human error, we need to be smart about how we create the online world of the future.”

“That means making sure safety and governance are built into new technologies, so we can prevent and remediate any harm they might cause in the wrong hands.” Indeed. And that goes for laws, regulators, and governments as well.

“Over time, every Australian parent should be able to feel confident that as their children enjoy the wonders of the internet they are protected from its dangers.” Adults also deserve to be protected from danger. And sometimes a child’s parent *is* the danger.

eSafety started life as the Children’s eSafety Commissioner and unfortunately it has failed to outgrow that narrow perspective in many ways.

It also struggles with the idea that children are not a homogeneous group. Toddlers, tweens, and teenagers are all different. But they are also people, not a separate species that is inferior to adults. They are far from alone there, alas.

Personally I find it alarming and potentially dangerous whenever someone takes an overly paternalistic approach to children. Children are not pets nor are they property.

Let us move on to the “role of the eSafety Commissioner” section.

The role of the eSafety Commissioner

“The eSafety Commissioner (eSafety) is Australia’s independent regulator and educator for online safety – the first of its kind in the world.” The Commissioner likes to point this out at every opportunity. Reach and frequency is an important marketing concept.

“Online harms are activities that take place wholly or partially online that can damage an individual’s social, emotional, psychological, financial or even physical safety.” Pretty broad, huh. What would an “offline harm” be, do you think?

There’s a list of examples. The usual things you’d expect (CSAM, terrorism) but also “bullies, abuses, threatens, harasses, intimidates, or humiliates another person”. Centrelink apparently doesn’t count.

eSafety also includes online content that “is inappropriate and potentially damaging for children to see” which we all know means porn and not Parliament Question Time.

The Online Safety Act 2021 came into effect in January 2022. eSafety mostly uses it to tell Facebook to take stuff down when people complain. It’s mostly image-based abuse materials that get removed, is my understanding.

Too many men are abusive shitheads, and cops tend to ignore women who report it. Taking it down at least stops ongoing abuse of one type, but I’d still like more evidence of safe use of these powers.

Abusive shitheads are really good at manipulating systems of power to act against their victims. Like how TERFs abuse @TwitterSafety via brigading. Sometimes the abusive shitheads are coming from inside the house.

“The statutory functions set out under section 27 of the Online Safety Act determine the strategic priorities of the eSafety Commissioner.” I won’t list them all.

I will note that “Register mandatory industry codes requiring eight sectors of the digital industry to regulate harmful online content […] such as online pornography” is one of them, despite claims from eSafety that it wasn’t their priority.

No, I have no idea why certain people don’t trust you. Lying is such an ugly word.

The 3 Pillars of P begin! Let’s find out what Prevention, Protection, and Proactive and Systemic Change mean. Hang on, that’s 3 Ps and an S. Or is it 2 Ps, a PC and an SC? Whatever, I’m sure it’s fine.

“Prevention is critical.” Neat! “Through research, education and training programs, eSafety works to set a foundation to prevent online harm from happening.” Ah.

Education and training as a solution appeals to a certain kind of well-meaning technocrat. It has its place, but structural changes tend to have a bigger effect on safety, based on the history I’ve read.

I mean, sure, you can teach cyclists the most effective techniques for leaping out of the way of an SUV that tries to murder you, but physically separating cars from bikes tends to work, too.

Physical analogies don’t really work well when you’re dealing with speech and communications, however, so my snark is possibly a little unfair.

For example: I keep having to point out to regulators and tech people that building a separate Children’s Internet is a bad idea for a bunch of reasons, even if it were technically feasible, which it basically isn’t.

A Children’s Internet would require such a radical restructuring of society that we’d be better off attempting to build Fully Automated Luxury Gay Space Communism instead, which would have the same end effect.

“We base our approach on evidence. We have been building this body of evidence over time to track our progress and to make sure we have real, positive impact.” I’d love to see some of this evidence. So far all I’ve seen is evidence of activity, not effectiveness.

A bunch of us @efa_oz @DRWaus and others did push for measurement and reporting of effectiveness in eSafety’s enabling legislation. Government didn’t want that, so we got activity measures instead.

“What gets measured gets managed” per Drucker, so guess what eSafety will most likely do?

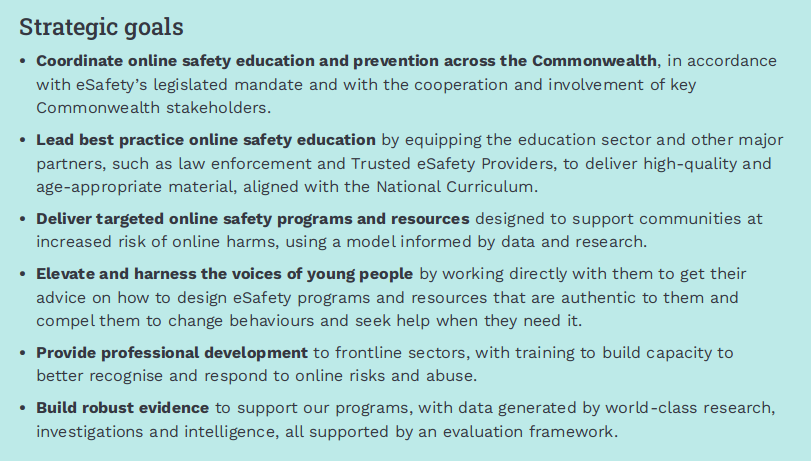

Here are the Strategic goals related to Prevention. Do you see any related to outcomes as distinct from activities? Let alone outcomes that are Specific, Measurable, Assignable, Realistic, and Time-bound.

None of this is useful for strategic planning. There are no win conditions defined here. No position and movement. Unsurprising, but still disappointing.

The Protection pillar is more of the same. Yawn.

The Proactive and systemic change pillar is a bit more promising. It’s about “Reducing the risk of online harms within online services and platforms”. What’s the plan here, I wonder?

“Safer product design is fundamental to creating a more civil and less toxic online world much like the safety and design standards in traditional industries, most of which are guided by consistent international rules and norms.” Hooo boy.

“Civility” has a long and rich history of being used by the powerful to oppress those with legitimate grievances. “How dare those uppity poors use such emotional language! Sure, we’re starving them to death, but calling me a capitalist pig is just as bad if not worse!”

Sure, sometimes people use language that others don’t like hearing. Sometimes it’s okay, sometimes it isn’t. The key is to look at the power relationships. “Punching up versus punching down” as they say in comedy circles.

When Mona Eltahawy says “How many rapists must we kill until men stop raping us?” and you focus on the first half of the sentence while ignoring the second half, you are siding with power over the oppressed in the name of civility.

[I am pausing to look up citations and check stuff as I go if you’re concerned about the odd delay here and there.]

And let us marvel for a moment at what “safer product design” even means in the context of human communications. Is eSafety going to require everyone use only Esperanto online in Australia? Create some kind of Académie Australienne to safeguard the language?

Well no, but “eSafety has been seeking to shift the responsibility back onto the tech sector to assess platform risks and incorporate safety into development processes. We call this Safety by Design.” Okay, but again, safety for whom? From what?

I’m quite supportive of the idea of not needing to worry about getting poisoned when I buy a coffee from a cafe, and I hardly ever check if my bread has been adulterated with sawdust. But speech isn’t bread.

I can see product liability of some kind happening for commercial software for certain well-understood issues. This is already true for certain software embedded into physical products. But speech? That gets really messy really fast.

“Safety should be considered as important as data privacy and security in global digital trust policy discussions and when setting norms for internet governance and online service provider regulation.” But what does ‘safety’ mean?

And, I mean, you could argue that safety is already considered as important as data privacy and security, i.e. not at all important. Look around.

The ‘goals’ are all nebulous activity items again, but “Focus on the future” includes the words ‘metaverse’ and ‘Web 3.0’ to make politicians think eSafety is modern and cool and hip and with it.

“Create regulatory partnerships with a range of domestic and international stakeholders, particularly overseas agencies with a similar remit and like-minded governments, to make sure global online safety regulation is coherent, proportionate and effective.”

Russia and the CCP have some exciting tools and techniques to share with us.

It’s unclear who the like-minded governments are, since Australia has no Bill of Rights and no right to free speech (in general; there’s a narrow implied right to political speech).

In this vein, we have the fourth P: Partnerships. “Safety online is an all-of-society responsibility that should know no borders or boundaries.” Yeah, see, imposing the standards of one country on all the others is what got us into this mess.

This is all just “we’ll work with everyone!” rhetoric because eSafety seems to believe it’s the centre of the universe and should be in charge of everything.

The end… or is it?

And that’s it. A much closer reading and more commentary than it probably warranted. It’s not a strategy, it’s a grab bag of platitudes, pompousness, and paternalism.

I can also do alliteration in threes, you see.

Anyway that took longer than I’d planned so I have to go do other things now. Here endeth the #tljr.

But wait, there’s more!

But wait! There’s more in the HTML of the page here: https://www.esafety.gov.au/about-us/who-we-are/strategy that isn’t covered in the Strategy.

eSafety has 6 lenses to add to its 4 pillars:

- Technology features

- International developments in policies and regulation

- Evolving harms

- Harm prevention initiatives

- New and emerging technology

- Opportunities.

Quite the grab bag, eh?

“livestreaming features, which may be end-to-end encrypted, are being exploited to facilitate on-demand child sexual abuse.” Stop trying to break encryption because you have no imagination.

“More commonly, a range of mainstream platforms and services allow adults and children to co-mingle, without age or identity verification.” Wait until eSafety learns about the existence of “outside”.

Did you know that you can go to church without having to show ID?

eSafety is joining everyone else in looking at AI/ML and algorithmic decision making because MPs are worried about what’s fashionable and budgets don’t allocate themselves.

Also if you want to be the über-regulator you can’t let anyone else get that budget or scope. If it has a computer near it, eSafety will be there!

“Anonymity and identity shielding allow an internet user to hide or disguise their identifying information online.” Anonymity and pseudonymity are also a requirement of the Privacy Act.

“The challenge is that real or perceived anonymity may contribute to a person’s willingness and ability to abuse others – and to do so without being stopped or punished.” The word ‘may’ is doing a lot of heavy lifting here, unsupported by evidence.

We see eSafety’s myopia here again as it focuses on “cryptocurrency and child sexual exploitation and abuse”. Just rebrand as the CSAM Commissioner already.

“the blockchain” is a separate thing, according to eSafety. The focus is still on CSAM, though.

“Tech regulation has implications for a range of digital rights, including freedom of expression, privacy, safety, dignity, equality, and anti-discrimination.” Finally noticed that, I see.

“It is further complicated by authoritarian regimes increasingly using online safety regulation to pursue arbitrary or unlawful politically motivated content censorship, often under the pretext of national security.” If it quacks like a duck…

“Democratic governments have a responsibility to show how to govern tech platforms in a manner that both minimises harm and prioritises and reinforces core democratic principles and human rights.” Feel free to start any time you like.

Perhaps eSafety could provide further details on what it believes are “non-core democratic principles”?

“eSafety will work with international partners to make sure the regulation of online platforms is based on democratic principles and human rights” Why not start with the regulations we have here, such as the Online Safety Act? #tljr

“Perpetrators of family and domestic abuse increasingly use technology to coerce, control and harass their current or former partners.” Yeah, the tools and techniques are quite popular with cops and governments, too. #tljr

“The internet was created by adults for adults, but it is young people who inhabit the online world more fully and now shoulder the primary burden of online risks.” [citation needed] #tljr

In what way do young people “shoulder the primary burden of online risks”?

Young people use tech a lot

[scene missing]

Scan your face to read your homework assignment?

“eSafety has started to explore the range of these measures, at the Australian Government’s request, by developing a roadmap for a mandatory age verification regime for online pornography.” Ah yes, the thing you promised you wouldn’t do.

AusGov Certified Porn™ coming soon to an internet near you.

The Opportunities section is keen on ‘safety tech’. “We want to work to help build this capability” so eSafety will also become a VC fund now?

Now there’s “challenging business models”. “There has long been concern about the business model of capturing and monetising personal information to drive massive revenues for some big technology businesses. This is often referred to as ‘surveillance capitalism’.”

Facebook, Cambridge Analytica, YouTube/FTC consent decree. “there have been concerns raised more recently about Tik Tok’s collection and sharing of personal data, including sensitive biometric information.” Wait for it…

“eSafety is concerned that the capture of this sensitive data can put children and other vulnerable communities in social, psychological and even physical harm if such data is not properly collected, secured and protected.”

If you can put down your racism for a minute, how do you imagine age verification tech will work?

eSafety’s preferred regulatory approach seems to boil down to “it’s okay when we do it”.

eSafety has also noticed ACCC’s “dark patterns” enforcement plans and would like some of that action.

Let’s leave it there.