In this series of posts, I’ll explain how we used a combination of NGINX reverse proxies, Docker, Traefik and a bunch of containers and virtual machines to build a lab that serves multiple websites over the same external IP.

I’ve been working on some internal projects lately that have required me to wrestle with containers and network proxying to get various things working. While I could have left it to others to deal with, I wanted to spend some time in the trenches to learn more about how modern tech stacks work.

Turns out, cloud native stuff is really complicated, and it’s quite challenging to set up and operate without a lot of knowledge about how all the pieces fit together.

The documentation is also frequently opaque and confusing for people not working with it every day. I’m pretty familiar with a lot of the base concepts, but I still found it heavy going. This post series is my attempt to make figuring this stuff out easier, particularly when I need to remember what I did in six months’ time.

Overview

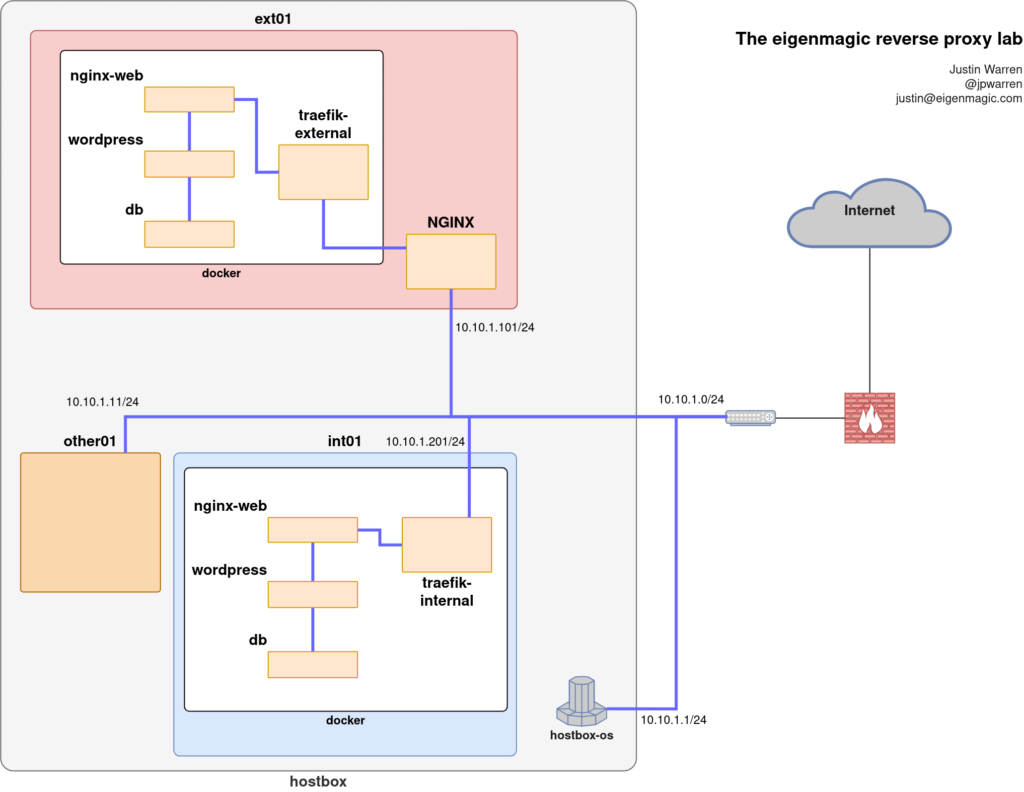

Here’s a quick sketch of our end state so you can see what the mess looks like at a high level once it was all working.

An overview diagram of the reverse proxy lab setup I ended up with.

The overall goal was to serve a variety of websites via a single frontend IP that’s public to the Internet, with traffic heavily filtered by a firewall that only allows a few select ports through. The ones we care about here are ports 80 and 443 for web traffic. Port 80 is only allowed because a few clients still attempt to contact websites on port 80 first and then get redirected to the secured port, and if port 80 isn’t there they fall over in a heap because secure comms is too hard.
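To make the port 80 behaviour concrete, here is a minimal Python sketch of a listener that does nothing except answer with a 301 pointing at the HTTPS version of the same URL. This is not what runs in the lab (that job belongs to the proxies described in this series); the function and class names are made up for illustration, and it only handles plain hostnames and IPv4 addresses in the Host header.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def https_location(host_header: str, path: str) -> str:
    """Build the HTTPS URL that a plain-HTTP request should be sent to."""
    # Drop any explicit port (e.g. "example.test:80") so the client
    # ends up on the default HTTPS port, 443.
    hostname = host_header.split(":", 1)[0]
    return f"https://{hostname}{path}"


class RedirectToHTTPS(BaseHTTPRequestHandler):
    """Answer every GET or HEAD on the plain-HTTP port with a 301."""

    def _redirect(self) -> None:
        host = self.headers.get("Host")
        if not host:
            # Without a Host header we cannot tell which site was wanted.
            self.send_error(400, "Missing Host header")
            return
        self.send_response(301)
        self.send_header("Location", https_location(host, self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()

    do_GET = _redirect
    do_HEAD = _redirect


def serve(port: int = 8080) -> None:
    """Run the redirector; 8080 is an arbitrary unprivileged test port."""
    HTTPServer(("127.0.0.1", port), RedirectToHTTPS).serve_forever()
```

Calling `serve()` and then requesting `http://127.0.0.1:8080/some/page` with a client that does not follow redirects should show the 301 and the `Location` header.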

We use the name of the site to figure out where traffic should go, and this means DNS is involved, so we already know we’re in for a bad time, but it gets worse. Much worse. As you’ll see shortly.

We’re also doing lots of TLS (because encryption is good and we need more of it, not less, you idiotic natsec muppets) so we need services that support Server Name Indication (SNI) information. This used to be a lot harder than it is now that most of the common tools support SNI well. I’m old enough to remember when Virtual Hosts were a big deal, let alone TLS.

Let’s work our way through the stack to see how this is set up and some of the choices we made and why.

The physical parts

There are very few physical components here because this is a lab. The firewall is a Ubiquiti UniFi Security Gateway (USG) and there’s a single server (hostbox) with an Intel i7-7700 @ 3.6GHz (8 logical CPUs), 32GB RAM, and some flash and spinning disk. In between them is a Ubiquiti 8-port PoE switch.

The USG and the server are connected to the switch via CAT-6 UTP for 1 GigE networking.

And that’s it! Everything else is done in software.

There’s other stuff in the lab, but none of that matters for what we’re doing here.

The server is running Ubuntu 20.04 LTS and has some services running in the base OS for a variety of reasons unrelated to the lab work we’re doing here, but some of the services, such as the lab DNS, will become important later.

The virtual machines

I also like using VMs for certain things because they force the physical network to be a bit more important than if you’re running everything in containers on your laptop. That helps when you need to take something from your lab into production, where you can’t simply share a filesystem or communicate between processes over a Unix socket.

The main function of the OS is to run VirtualBox to give us a few virtual machines. We use VirtualBox here because, at least the last time I checked, it was the easiest way to share the physical interface of the host with the virtual machines so that they can all have IP addresses on the same /24 subnet. The last time I tried this with KVM I couldn’t figure out how to get an IP assigned to the physical host and both of the VMs at the same time and have them all able to communicate with each other as if they were separate physical servers. If you know how to do it, let me know, because I’d really like to not use VirtualBox at all in the lab.

We have three virtual machines: ext01, which is where externally facing things run; int01, which is where internally facing things run; and other01, which is for a separate project, but we need to play nicely with it or that project will be mad at us.

The lab subnet in this example will be 10.10.1.0/24.
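If you want to sanity-check addresses against that subnet, Python’s standard `ipaddress` module will do it. This is just a small sketch: `in_lab` is a helper name I made up, 10.10.1.101 is the example address this writeup uses for ext01, and 10.10.2.5 is an arbitrary address picked to show a miss.

```python
import ipaddress

# The example lab subnet used throughout this writeup.
LAB_SUBNET = ipaddress.ip_network("10.10.1.0/24")


def in_lab(address: str) -> bool:
    """Return True if the address falls inside the lab subnet."""
    return ipaddress.ip_address(address) in LAB_SUBNET


print(LAB_SUBNET.netmask)              # 255.255.255.0
print(len(list(LAB_SUBNET.hosts())))   # 254 usable host addresses
print(in_lab("10.10.1.101"))           # True
print(in_lab("10.10.2.5"))             # False
```

A /24 leaves 254 usable addresses once the network and broadcast addresses are taken out, which is plenty for a host, a few VMs and whatever else ends up in the lab.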

The routing challenge

The broad routing challenge is:

- Traffic for a site appears on the public, Internet-routable IP

- The USG will port-forward anything arriving on port 80 or 443 to the IP of ext01. For this writeup we’ll call it 10.10.1.101/24

- ext01 will do a 301 redirect for everything appearing on port 80 to HTTPS on port 443, forcing TLS for everything trying to come in.

- ext01 will look at the traffic on port 443 and route it based on the SNI host the traffic is for.

- Anything for playnice.eigenmagic.net is routed to other01 on port 443.

- Anything for webification.eigenmagic.net is routed to a local web stack on ext01 running as docker containers.

- Anything for project-eschatron.eigenmagic.net is routed to int01, which is running another set of docker containers.

That’s basically it.
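The routing rules above boil down to a lookup table keyed on the SNI hostname. Here is a rough Python sketch of that decision, purely to illustrate the logic; the real work is done by the proxies, and the names `Backend`, `SNI_ROUTES` and `route_for` are invented for this example.

```python
from typing import NamedTuple, Optional


class Backend(NamedTuple):
    """Where traffic for a given site should end up."""
    machine: str
    note: str


# One entry per site, keyed on the hostname the client sends via SNI.
SNI_ROUTES = {
    "playnice.eigenmagic.net": Backend("other01", "port 443 on other01"),
    "webification.eigenmagic.net": Backend("ext01", "local docker web stack"),
    "project-eschatron.eigenmagic.net": Backend("int01", "docker containers on int01"),
}


def route_for(sni_hostname: str) -> Optional[Backend]:
    """Pick a backend for an SNI hostname, or None if we don't serve it."""
    # DNS names are case-insensitive and may carry a trailing dot.
    key = sni_hostname.strip().rstrip(".").lower()
    return SNI_ROUTES.get(key)


for name in ("webification.eigenmagic.net", "unknown.example"):
    print(name, "->", route_for(name))
```

Anything that doesn’t match a known name comes back as `None`; what to do with that traffic (drop it, or send it to a default site) is a choice this sketch deliberately leaves open.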

In the next post, we’ll dig into how we get all these different components working with each other.