This post continues on from the first post in this series on setting up a reverse proxy lab. Read the first post here.

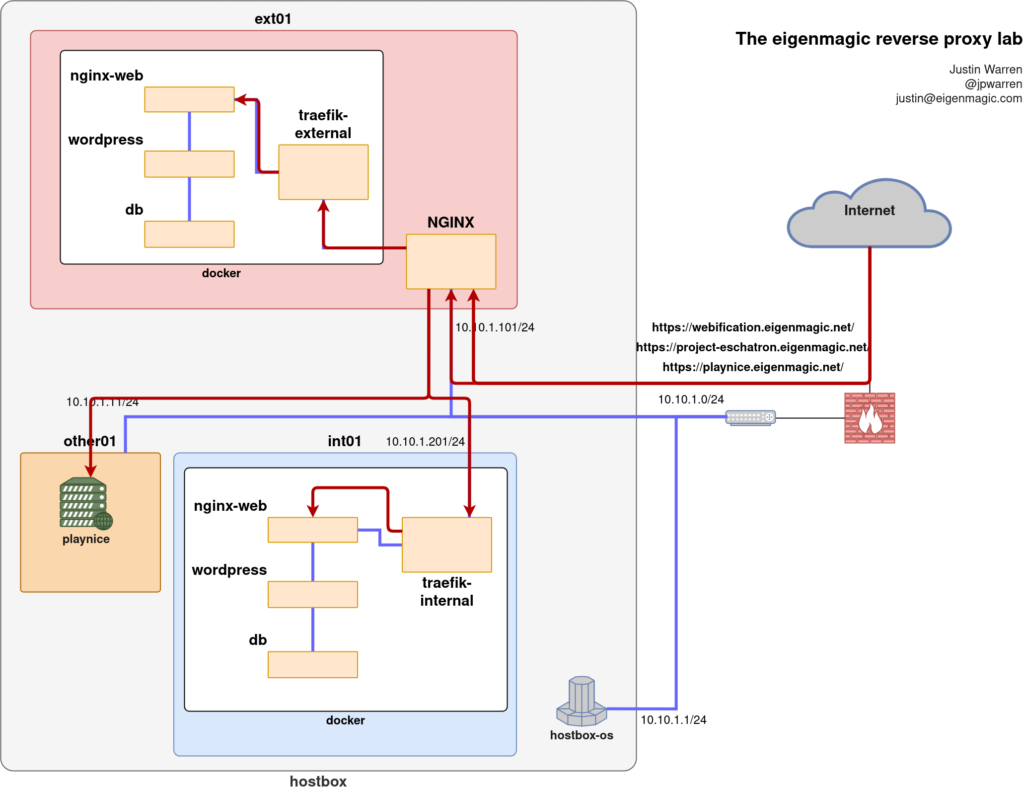

When we first started this project, we had an existing project (playnice.eigenmagic.net) sitting behind an NGINX reverse-proxy on ext01, so we needed to keep that working while we added the docker web stack to ext01. But that meant the docker web stack would need to use ports other than 80 and 443 so that the existing traffic via the proxy kept flowing.

And it also meant that we’d need the NGINX proxy to be able to tell the difference between existing traffic for playnice and the new traffic for our new sites.

But how?

We tried using Traefik and HAProxy, but ultimately gave up and used NGINX. I’ll explain what we tried and why we gave up first, but if you don’t care, just skip ahead to the part about how we got NGINX to do what we wanted.

Trying Traefik

We were already using Traefik as an ingress proxy on another Docker stack, so we wondered if we could just switch everything over to Traefik here as well, since the only work the ext01 proxy was doing was, well, directing traffic. Why not use Traefik to direct traffic?

Because it’s really hard and we couldn’t figure it out is why.

What we tried

We were already running Traefik in a container, using the Docker provider and labels to autodetect new containers that started and wanted to have traffic fed to them by Traefik.

Here’s the main part of our docker-compose.yml config:

    services:
      traefik:
        # The official Traefik docker image
        image: traefik:latest
        command:
          # Enables the web UI
          - "--api.dashboard=true"
          - "--accessLog.filepath=/logs/traefik.log"
          # Listen to Docker
          - "--providers.docker=true"
          # Don't route to containers unless they ask us to
          - "--providers.docker.exposedbydefault=false"
          # Parse config in the /config/ volume as well, watching for changes
          - "--providers.file.directory=/config/"
          - "--providers.file.watch=true"
          # These are internal docker networking ports, not the ones that get
          # exposed outside of docker. Connect them to the outside world
          # with the ports: and expose: sections
          - "--entrypoints.web.address=:80"
          - "--entrypoints.websecure.address=:443"
          # Make all HTTP switch to HTTPS
          - "--entrypoints.web.http.redirections.entryPoint.to=websecure"
          # Secure admin interface
          - "--entrypoints.adminsecure.address=:4443"
        labels:
          # Enable the Traefik dashboard, securely on a specific hostname
          traefik.enable: "true"
          traefik.http.routers.traefik.rule: "Host(`traefik-external.eigenmagic.net`)"
          traefik.http.routers.traefik.entrypoints: websecure
          traefik.http.routers.traefik.service: "api@internal"
          traefik.http.routers.traefik.tls: "true"
          traefik.http.services.traefik.loadbalancer.server.port: "4443"
          # Only allow admin access from a specific host
          traefik.http.routers.traefik.middlewares: "traefik-auth"
          traefik.http.middlewares.traefik-auth.ipwhitelist.sourcerange: "10.10.1.2/32"
        # Map external ports to internal docker ports
        ports:
          # The HTTP port
          - "8080:80"
          # HTTPS port
          - "8443:443"
        expose:
          # The Traefik Web UI externally
          - "4443"
        volumes:
          # So that Traefik can listen to the Docker events
          - /var/run/docker.sock:/var/run/docker.sock:ro
          - ${APPVOLBASE}/letsencrypt:/letsencrypt
          - ${APPVOLBASE}/traefik-ext:/config
          - ${APPVOLBASE}/traefik-ext/logs:/logs
        networks:
          - proxy
        restart: ${RESTART_POLICY}

Then you can add a container to be routed by Traefik dynamically by adding labels to containers, like this:

    labels:
      traefik.enable: "true"
      traefik.http.routers.pihole.rule: "Host(`pihole.eigenmagic.net`)"
      # Add web interface
      traefik.http.routers.pihole.entrypoints: "websecure"
      traefik.http.routers.pihole.tls: "true"
      traefik.http.services.pihole.loadbalancer.server.port: "80"

So, in theory, we figured we could just add a Host(`playnice.eigenmagic.net`) router rule connecting the websecure entrypoint to a Service that just forwarded the traffic to the actual server.

Well, no, because Traefik actually terminates the TLS connection and then proxies the connection to the backend system, and we didn’t want to have separate certificates in the routed path. We just wanted Traefik to notice the name of the destination Host in the headers and forward traffic to that.

If you have a greater level of integration between the layers, you can terminate external names on the Traefik proxy host, and then do whatever you like internally, even connecting without TLS if you don’t mind adversaries being able to watch all of your internal traffic if they happen to get inside somehow (*cough*NSA PRISM*cough*). But we didn’t want to get all up in the other project’s business and have to coordinate what we were doing with them.

We tried using a TCP load-balancer service configured with PROXY protocol support, but this doesn’t seem to pass the connection through unimpeded. It makes a new connection from Traefik to the destination host and proxies the content of whatever comes through, so you’re actually intercepting the traffic stream, which is not what we wanted.

What we really wanted was to just look at the SNI name in the TLS handshake and make decisions based on the hostname there, but otherwise leave the TLS data stream alone.

After a couple of hours of frustration, we gave up and went looking for another solution.

Terminating Traefik

Traefik has a very pretty dashboard, and it feels kinda magical the way it can automatically detect new containers and plug them into itself dynamically using labels. When it works, it’s great.

But.

I find the documentation for Traefik really opaque, and I’ve got plenty of background in networking and web stacks built up since the web was in its infancy. There’s some reference material on how certain features work in isolation, but it’s not especially comprehensive. Figuring out how to join the different parts together to do a thing feels like a lot of work, and it’s unclear if you’re on the right track or not.

In theory, it should be fairly straightforward. Conceptually, the way Traefik uses modular components like entrypoints, routers, services, and providers seems like a good approach. But whenever I try to actually wire them all together using the Traefik syntax, I’m never sure if what I think I’m asking it to do is what it’ll actually do.

I can’t figure out how to debug Traefik in the kind of detail I’m used to with NGINX. I can’t work out how to get it to spit out debug logs, or log when connections come in, but fail for some misconfiguration reason. Using Traefik just feels hard. Harder than it should be.

I always feel like I’m not smart enough to use Traefik, and if I can get something to work, it feels like an accident and not something I actually understand. I suspect that if you use it a lot, and every day, it might start to make more sense and you’ll be able to debug things more quickly. But I don’t have that kind of time.

Trying HAProxy

We looked at HAProxy because, well, it has the name Proxy right there on the tin, so it should be able to proxy our traffic for us, right?

Having never used HAProxy before, we fumbled about a bit trying to figure out how to set up a backend to talk to the webserver for playnice.eigenmagic.net but without success. We tried a few variations of TCP mode and HTTP mode, various frontend/backend setups, but couldn’t manage to successfully make the connections work all the way through from client->proxy->server.

We had some partial successes, such as getting traffic to detect SNI names and push a connection through, but we also got some new (to us) errors about backends being offline because HAProxy wasn’t detecting them in the way it expected.

We gave up fairly quickly because it became clear we just didn’t know enough about how HAProxy views the world to understand its configuration nuances, and what we were trying to do was a bit complex (TLS is a bit like that). This is no slight on HAProxy, and entirely on us.

While writing this up, I had another quick look around and found this old blog from HAProxy themselves about load balancing with SNI which looks like just what we needed. Perhaps if we hadn’t spent as much time on trying to figure out how to get Traefik working we’d have been able to get HAProxy working.

Ah well. Hopefully it helps someone else.

Back to NGINX

After a frustrating few hours, we ended up going back to what we felt we knew and were confident would provide us with a working solution: NGINX.

The old, complex way

We’d previously used NGINX to proxy sites using a fairly complicated setup a bit like this:

    # Upgrade HTTP requests when asked
    map $http_upgrade $connection_upgrade {
        default upgrade;
        '' close;
    }

    # The backend server to serve traffic
    upstream backend {
        server 10.10.1.11:443;
        keepalive 32;
    }

    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=proxy_cache:10m max_size=3g inactive=120m use_temp_path=off;

    server {
        listen 80;
        listen [::]:80;
        server_name playnice.eigenmagic.net;

        error_log /var/log/nginx/playnice.errors.log warn;
        access_log /var/log/nginx/playnice.access.log combined;

        root /var/www/playnice;

        # Redirect everything to HTTPS
        location / { return 301 https://playnice.eigenmagic.net$request_uri; }
    }

    server {
        listen 443 ssl http2;
        listen [::]:443 ssl http2;
        server_name playnice.eigenmagic.net;

        root /var/www/playnice;

        error_log /var/log/nginx/playnice.errors.log warn;
        access_log /var/log/nginx/playnice.access.log combined;

        # Wildcard TLS cert for the lab
        ssl_certificate /etc/letsencrypt/live/eigenmagic.net/fullchain.pem;
        ssl_certificate_key /etc/letsencrypt/live/eigenmagic.net/privkey.pem;
        ssl_protocols TLSv1.3;
        ssl_ciphers HIGH:!MEDIUM:!LOW:!aNULL:!NULL:!SHA;
        ssl_prefer_server_ciphers on;
        ssl_session_cache shared:SSL:10m;

        gzip on;
        gzip_disable "msie6";
        gzip_vary on;
        gzip_proxied any;
        gzip_comp_level 6;
        gzip_buffers 16 8k;
        gzip_http_version 1.1;
        gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

        add_header Strict-Transport-Security "max-age=31536000";

        # Forward everything to the backend server
        location / {
            client_max_body_size 50M;
            proxy_set_header Connection "";

            # These headers are important to clue in the remote server
            # about the request we're proxying from the client
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Frame-Options SAMEORIGIN;

            proxy_buffers 256 16k;
            proxy_buffer_size 16k;
            proxy_read_timeout 600s;

            proxy_cache proxy_cache;
            proxy_cache_revalidate on;
            proxy_cache_min_uses 2;
            proxy_cache_use_stale timeout;
            proxy_cache_lock on;

            # Where to proxy to, using TLS
            proxy_pass https://backend;
            proxy_ssl_name $host;
            proxy_ssl_server_name on;
            proxy_ssl_protocols TLSv1.3;
            proxy_ssl_session_reuse off;
        }
    }

This listens for connections to the server by name, and proxies them onward to the remote host, using TLS and setting various headers to match what the remote server would expect from a client connecting directly, and then passing the information back to the client. It works, but this is a lot of config file to add what is basically a name->server mapping, and we wanted to be able to do more of these.

Is there a better way?

Yes, it turns out there is. The ngx_stream_ssl_preread_module does what we need.

You add the configuration at the top level of the NGINX config, alongside the http block rather than inside it, which on our Ubuntu system means adding a config file into /etc/nginx/modules-enabled/:

    stream {
        map $ssl_preread_server_name $name {
            playnice.eigenmagic.net playnice_backend;
            webification.eigenmagic.net webification_backend;
            project-eschatron.eigenmagic.net eschatron_backend;
        }

        upstream playnice_backend {
            server 10.10.1.11:443;
        }

        upstream webification_backend {
            server 10.10.1.101:8443;
        }

        upstream eschatron_backend {
            server 10.10.1.201:443;
        }

        server {
            listen 443;
            proxy_pass $name;
            proxy_protocol on;
            ssl_preread on;
        }

        log_format basic '$remote_addr [$time_local] '
                         '$protocol $status $bytes_sent $bytes_received '
                         '$session_time "$upstream_addr" '
                         '"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

        access_log /var/log/nginx/stream.access.log basic;
        error_log /var/log/nginx/stream.error.log;
    }

This sets up a stream proxy that maps the SNI name to a backend system. The server listens on port 443, reads the name from the TLS handshake without decrypting anything, and proxies the traffic to the matching backend as a raw stream. It uses the PROXY protocol (proxy_protocol on) to pass through information like the actual client IP, which we had to set using headers in the config above.
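One thing the map doesn’t cover is a client that sends a name we don’t know about, or no SNI name at all. With no matching entry, $name ends up empty and the connection will most likely just fail. If you’d rather send those connections somewhere, a `default` entry in the map is one way to do it. This is a sketch rather than something from our running config, and picking playnice_backend as the fallback is purely an illustrative choice:

```nginx
# Goes inside the stream { } block, replacing the map shown earlier
map $ssl_preread_server_name $name {
    playnice.eigenmagic.net          playnice_backend;
    webification.eigenmagic.net      webification_backend;
    project-eschatron.eigenmagic.net eschatron_backend;

    # Fallback for unknown or missing SNI names (illustrative choice)
    default                          playnice_backend;
}
```

Whatever the fallback backend is, it will be handed TLS connections for names it doesn’t have certificates for, so clients will see certificate warnings. That may still be preferable to a silent failure while you’re debugging.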

There is one change required on the backend servers: they have to have the PROXY protocol enabled on the server listener, which ours do. Otherwise you’ll get TLS errors because the server is expecting a different protocol and won’t know how to deal with the PROXY connection setup.
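If the backend is itself NGINX, turning on the PROXY protocol might look roughly like the sketch below. This is not copied from our backend servers: the certificate paths and root are borrowed from the earlier example, and 10.10.1.1 is a made-up address standing in for the ext01 gateway, so substitute your own. The set_real_ip_from and real_ip_header directives need the realip module, which I’d expect to be present in most packaged builds, but check yours.

```nginx
server {
    # Expect a PROXY protocol header ahead of the TLS handshake
    listen 443 ssl proxy_protocol;
    server_name playnice.eigenmagic.net;

    ssl_certificate     /etc/letsencrypt/live/eigenmagic.net/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/eigenmagic.net/privkey.pem;

    # Only trust PROXY headers sent by the gateway (placeholder address)
    set_real_ip_from 10.10.1.1;

    # Use the client address from the PROXY header as $remote_addr
    real_ip_header proxy_protocol;

    root /var/www/playnice;
}
```

Once proxy_protocol is set on a listener, every connection to it has to start with a PROXY header, so connecting to the backend directly and bypassing the gateway will fail on that port.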

Connecting the pieces

In the next post, I’ll explain more about how the whole setup works, and some of the nuances of setting up Traefik to listen on the right ports when co-hosted on the same system that is running the main NGINX gateway proxy that is doing all this traffic direction.