I’ve had to write this email multiple times now, so, taking my own advice, I’ll write it as a blog and then I can just send the link to people. As can you!

Vendors, particularly storage vendors, like to say Quality of Service when they mean priority throttling, and they are quite different things. I contend that real QoS requires hard-settable upper and lower limits for resources in your system if you want to be able to legitimately call it Quality of Service and not throttling.

How Priority Throttling Works

Many vendors use QoS to mean that you can assign different priorities to a workload, as service tiers. The ‘precious metals’ scale is often used, so you have Platinum for the best service level, Gold for the next one down, Silver under than, and finally Bronze.

The idea is that your absolute mission critical workloads get Platinum (the highest service level) and stuff that is least important gets Bronze.

An example set of service levels.

Platinum is more expensive than gold, so this service tier is meant to be more expensive. It usually costs more to provide in some way. Perhaps the service response time is faster, say, within two hours instead of six hours for gold. Maybe it’s the performance, in IOPS or bandwidth.

This is usually a minimum guarantee. A customer chooses Gold because they don’t want less than, say, 3000 IOPS at 8kB blocks. Or maybe a 1 Gbit/s network link. The maximum will depend on the implementation, and this is where the trouble begins.

Empty is Better

When you first commission this sort of infrastructure, it’s empty. There is no contention for the resources. There’s no one else to clog up the WiFi channel, or sharing the Coax, or running data over the same uplinks. You might have only paid for Silver service, but you can quite happily get Gold or even Platinum.

In a priority throttling situation, people who bought Silver can happily enjoy a higher level of service because there aren’t many Gold people on the system, and there’s plenty of capacity to go around.

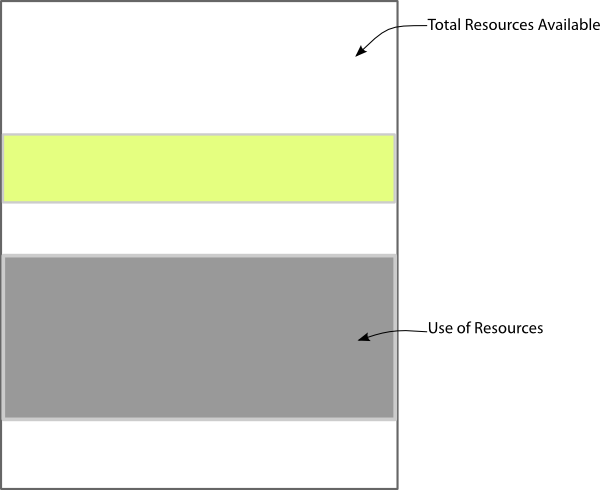

Use of resources in a mostly empty system.

There’s no contention, so the service tiers don’t really have any effect. People are free to use up to the maximum resources they are able to. In this case, we have someone who bought Silver using a lot more resources than someone who bought Gold. But, neither is adversely impacted, so the minimum required resources are available to both.

The problem only kicks in when the system starts to get full. Now we have several Gold customers who can’t fit if the Silver customer keeps using the same amount, let alone a Platinum and Bronze customer. We have contention for limited resources. So what to do?

Resource contention on a full system.

The priority kind of QoS uses a priority-weighting algorithm of some sort to figure out how much of the total resources should be allocated to each of the workloads waiting for it. And this is where your allocation of “workload priority” to service tier actually takes effect. The theory is that, in order to provide the required minimum resources to the Platinum workload, the Gold workloads should suffer a bit, the Silver workloads will suffer more, and the Bronze the most. Hopefully there is enough capacity to cater for everyone’s minimum, because if there isn’t, then what happens next depends on the algorithm.

Some algorithms will kick Bronze off the system entirely. Others will still provide some barest of minimum resources, but Bronze will still suffer a lot more than Silver or Gold.

This solution is very common, and it’s based on engineers thinking about how to cater for all the different workloads “fairly”. There’s an assumption that all the workloads are essentially neutral. They’re just computer programs running on the system, after all.

But the reasons for running those workloads are not neutral. They’re driven by people, who are not rational economic actors with access to perfect information. Far from it.

The User Experience

Consider now the user experience of this sort of system. On day one, you get amazing performance from the shiny new system. You crow about how awesome the new hardware/software/vendor is. You compare it to the old system it replaced (which was older technology, had been in operation for years, and was, of course, perfectly maintained and operated) and cry from the rooftops how much better your life is now. There may be cake.

On day 190, when the dev team run a big data load, your performance suffers a bit because you’ve been using more resources than you originally specified. Maybe you underestimated, or maybe you just started to do more with the lovely fast system you now had access to. And so cheap! Why not use it to its fullest? You file an incident report with the service desk, helpfully informing them that your system is “a bit slow”, and so beings the doom spiral that is modern IT.

On day 465 you can barely run your normal workloads (which consume 2x the original resource requirements, but the budget for new infrastructure will come through any day now, honest) and everyone on the platform is suffering. It simply wasn’t designed to handle the amount of workload that is in place, and the workload happened originally because people could and came to expect that level of resources as normal.

Because that’s what people do. They acclimate very quickly to changes in their salary level, benefits, commute times, ambient temperatures, and availability of decent coffee. People notice changes that feel relatively sudden, and don’t notice gradual ones. People experience losses more strongly than gains (it’s called loss-aversion, and is fascinating).

We’re Used to Pre-Throttling

We’ve been doing this kind of performance tiering for some time. Roll back the clock five to ten years, and gold service was the expensive, wide-striped, fast disk (FC attached 15k RPM drives, maybe flash or RAM accelerated on the bigger arrays). Silver was slower, but still adequate 10K RPM drives. Bronze was whatever we had left.

The thing about doing performance tiering this way is that it has inherent upper performance limits within the tier. There’s only so much throughput/IOPS within a grouping of HDDs in a RAID. If you hit that limit, and needed more, then you had to move your stuff to a higher tier, and, presumably, pay for the privilege. If all you could afford was Bronze, that’s what you got, and your ability to affect Platinum tier was limited (though not zero).

Imagine instead that you replace a system with in-built limits on its tiers with an all-flash array. Everyone gets flash! Awesome!

But is it?

The CPU in the platform is limited, so imagine the situation in the diagrams above as the array gets full. What happens? Same thing. Where the performance used to be amazing, now it sucks relative to the amazing performance I just got used to. It’s not a bad array, it’s the same one it always was. But now it feels crappy in comparison to yesterday.

All IT Departments are MSPs Now

Like it or not, the days of buying a full stack of dedicated hardware for a single workload are now, thankfully, pretty much over. There are exceptions (there are always exceptions) but the fact that hypervisors are so utterly mainstream now demonstrates that running virtualised workloads on a common pool of infrastructure is a thing that isn’t going away.

That means that all IT departments provide a service to the business units who run workloads on the infrastructure that they manage. That is, by definition, a managed service provider.

To do managed service provision well, you need to be able to provide your customers with the service they paid for. If I buy a 1 Gb/s link from a telco, I expect to be able to get 1Gb/s out of it whenever I want. If you want to thin-provision your network so that there might be contention at some point, that’s up to you, but I’ll rightly consider it a fault if I can’t get my 1Gb/s.

But I don’t expect to be able to get 10Gb/s from that same link unless I shell out a bunch of money for a higher limit. Imagine if I could buy a 1Gb/s link that actually gave me 10Gb/s for the first three months because there was no one else on it. What would you do? And then if, after three months, it suddenly dropped back to 1Gb/s max, how would you feel?

That’s why having a maximum resource usage level is important for a managed service provider. For a start, it keeps people’s expectations consistent with their experience, which is what’s actually important for good customer service. Trust is built on predictability.

Secondly, it means that if there is sufficient demand for resource usage, the people supplying it actually get paid for it. Giving it away for free is called charity, and even if you’re a charity, you still have to pay for power somehow because the utility companies definitely aren’t charities.

Vendors and QoS

If your gear only allows me to throttle people down when the gear gets full, I can’t do proper managed service provisioning with useful economic signals to my customers. I can’t function like an MSP.

I have to put in a lot more effort to build in something similar to my services that works around your kit. I have to be careful about how I manage expectations and resource usage. I have to be really careful about how I do budgeting and financing of new gear.

All those things are painful, and I really wish more vendors understood the version of QoS I’ve outlined here. To those who do, thank you, because you make it easier for me to recommend your gear to my clients.