PernixData FVP, in its upcoming version (currently in private beta), has some intriguing possibilities as part of an ecosystem of products, and it adds one of the big features I asked for in my prep post.

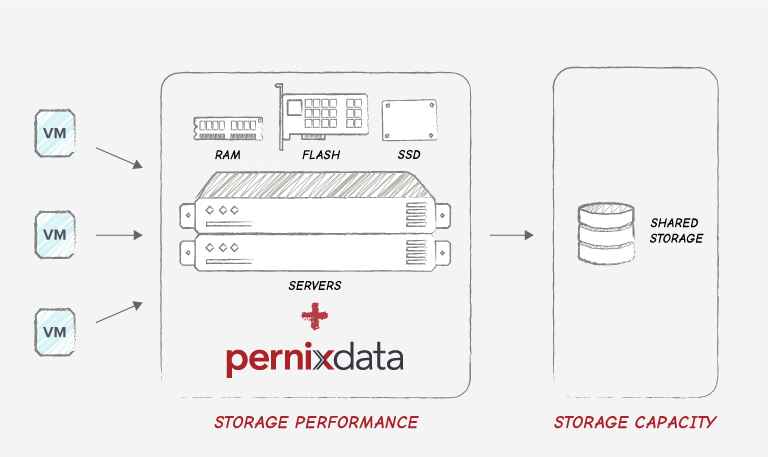

What I like most about FVP is its non-disruptive nature. Subject to a few caveats, you can deploy FVP into an existing vSphere cluster without having to reboot hosts, or reconfigure networks, or deploy additional VMs. It just slides into the kernel to provide a distributed cache for whichever datastores or VMs you want to accelerate.

My mind enjoys playing with obscure corner cases, so I immediately thought: “Hey, what if you could plug in a flash appliance (like something from Violin Memory, Pure Storage, or SolidFire) and use that to accelerate workloads on network-connected disk arrays?” It’s a bit insane, and I was expecting to hear “No, you can’t do that.” To my surprise, PernixData CTO Satyam Vaghani said that yes, you could, subject to a few caveats, like having a good enough network and accepting the obviously higher latencies than local flash. I had to rewatch the video to confirm that this was what he actually said, but yes, apparently it’s possible. (See all the videos from Storage Field Day 5 here).

Why on Earth would you want to do that?

Better is Good Enough

Adding flash to an existing storage array is hard, and usually disruptive. It’s either a forklift upgrade of an array, or scheduled downtime to plug in new hardware. And then it only benefits storage on that array. FVP can be applied per-VM, so it’s a lot more flexible and granular. But putting extra memory or flash into your VM cluster is also disruptive. Dropping some standalone flash onto a network is pretty easy to do. Fibre Channel is easy enough to zone in if you have some spare switch ports, and if FVP could use NFS-attached flash, that’s easy to add to an existing network, too. Combined with the new compression and dedupe abilities of FVP, the network load isn’t likely to be overwhelming.

Now, if you have a cluster with storage performance issues, but without much spare capacity (RAM is always under pressure, right?), you could find some flash on another appliance somewhere in your datacentre and use that to accelerate workloads. It doesn’t have to be faster than server-side flash installed in the cluster; it just has to be better than the pool of SATA the data is currently on. The network part of the data path is only a small percentage of the overall fetch time: seek times and rotational delays on spinning disks run to several milliseconds, while FC switch hops add mere microseconds. I can think of a past client with misaligned VMs on NetApp SATA aggregates that could have really used this ability, particularly when some of the VMs got busy.
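To put rough numbers on that, here is a back-of-the-envelope sketch in Python. Every figure in it is an assumption I picked for illustration (an 8 ms SATA fetch, 5 µs per switch hop, four hops on the path), not a measurement from FVP or from any particular array or switch, and `network_share` is just a made-up helper name.

```python
def network_share(media_latency_us: float, hop_latency_us: float, hops: int) -> float:
    """Fraction of total fetch time spent crossing the network."""
    network_us = hop_latency_us * hops
    total_us = media_latency_us + network_us
    return network_us / total_us


# Illustrative assumptions only, not measured values.
SATA_FETCH_US = 8000.0  # assumed seek + rotational delay for a SATA disk (8 ms)
HOP_US = 5.0            # assumed latency added per FC switch hop
HOPS = 4                # assumed number of switch hops on the data path

share = network_share(SATA_FETCH_US, HOP_US, HOPS)
print(f"Network share of a SATA fetch: {share:.2%}")
```

With numbers anywhere in this ballpark, the switch hops are a rounding error next to the disk itself, which is why networked flash only has to beat the spinning disk rather than local flash. Plug in your own figures before drawing conclusions about a real environment.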

Choices

It also means that you have a lot of options if you’re thinking of deploying some flash into your datacentre. Rather than dedicate it all to the VM cluster by installing PCIe cards or flash drives in all your hosts, you could take a slice off a flash array for your acceleration pool and use the rest for a dedicated workload that needs flash. It doesn’t even have to be a virtualised workload.

The constant pressure to reduce costs, and naive decisions about how to do so, can lead to VMware clusters hitting resource constraints: lack of memory, no spare hosts, slow disk. Fixing them is a challenge, particularly if outages are required. After all, the cluster is busy because the VMs are being used, and simply turning a few off isn’t a great solution. Some IT environments get into trouble because of unfortunate decisions made in the past. Acknowledging the mistakes (and learning from them so you don’t make them again) is important, but so is fixing the immediate problem.

Being able to drop in some additional resources in a non-disruptive, possibly creative, way can help organisations get out of a bind. It can also prove the case for a more permanent deployment of acceleration or flash storage.

My SFD5 colleague Robert Novak has an interesting take on this idea that is worth a read.

What About Infinio?

This latest release of FVP will put pressure on Infinio. Infinio was aiming at a lower price point than FVP, so it’s possible each company can still target different market segments and succeed, but it’s hard to see Infinio surviving if PernixData move down-market with a cheaper version of FVP.

Previously, FVP only did block storage, while Infinio did NFS, so it was possible for them to peacefully coexist in the VMware market. The particular method used by Infinio did some funky network mucking about to redirect NFS traffic via the Infinio acceleration VM, and Infinio used spare RAM rather than flash for the cache pool. FVP intercepts the data path in the hypervisor kernel, rather than at the network protocol layer, which is how they’re able to intercept both NFS and block traffic now. FVP have also added RAM support as well as flash, so FVP now offers a superset of Infinio’s acceleration features.

Infinio can’t really move up-market without offering more features than they currently have, and I’d expect that the acceleration VM approach they’ve chosen would make that quite a challenge, certainly in the short term. I’ll be watching with interest to see how Infinio responds.
