Diablo Technologies are a Canadian company who have developed flash storage that you access like memory, which they call Memory Channel Storage (MCS). It's like the inverse of a ramdisk, for those of us old enough to remember such things: put flash into your server, but access it over the memory channel.

The performance advantages are pretty clear. The fastest way for the CPU to get at data is when it's already loaded in a register:

```asm
cmp ecx, edx    ; compare two values already held in registers
jne LABEL       ; branch if they differ (LABEL is a placeholder)
```

There aren't many registers, so you have to put data somewhere else: RAM or other storage. Accessing memory is several orders of magnitude faster than I/O to even directly attached disk, let alone disk on the other end of a network cable somewhere.
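To put rough numbers on that gap, here's a back-of-the-envelope sketch. The latency figures are assumed round numbers of the kind commonly quoted, not measurements of any particular system (and certainly not of MCS); the point is only how many powers of ten separate the tiers.

```python
import math

# ASSUMED order-of-magnitude access latencies in nanoseconds.
# Illustrative round numbers only, not measurements.
ASSUMED_LATENCY_NS = {
    "DRAM": 100,
    "local flash (PCIe SSD)": 100_000,   # ~100 microseconds
    "local spinning disk": 10_000_000,   # ~10 milliseconds
}


def orders_of_magnitude(slower_ns: float, faster_ns: float) -> float:
    """Return how many powers of ten separate two latencies."""
    return math.log10(slower_ns / faster_ns)


if __name__ == "__main__":
    dram = ASSUMED_LATENCY_NS["DRAM"]
    for name, ns in ASSUMED_LATENCY_NS.items():
        if name == "DRAM":
            continue
        gap = orders_of_magnitude(ns, dram)
        print(f"{name}: ~{gap:.0f} orders of magnitude slower than DRAM")
```

Swap in your own numbers; with these assumptions flash comes out about three orders of magnitude behind DRAM and spinning disk about five.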

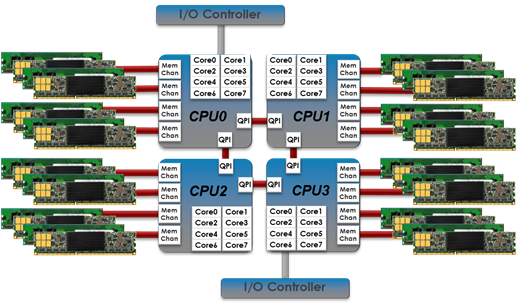

Plus, I/O has to travel over a data bus of some sort to reach the CPU. That bus is shared between many devices, and while some systems have more than one, none has a dedicated bus for every I/O device. Only one device can talk over a shared bus at a time, so there's contention that has to be managed.
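A deliberately simplified model shows why sharing hurts. It assumes a single bus that carries one transfer at a time in arrival order, and ignores arbitration overhead, bursts and everything else real hardware does; the function names and transfer times are hypothetical.

```python
from itertools import accumulate


def finish_times_shared_bus(transfer_ns: list[int]) -> list[int]:
    """Hypothetical model: one shared bus, so transfers run back to back.
    Each transfer finishes only after every earlier one has finished."""
    return list(accumulate(transfer_ns))


def finish_times_dedicated(transfer_ns: list[int]) -> list[int]:
    """Contrast: every device has its own path, so transfers fully overlap."""
    return list(transfer_ns)


if __name__ == "__main__":
    # Hypothetical: four devices each want a 1,000 ns transfer at the same moment.
    requests = [1_000, 1_000, 1_000, 1_000]
    print("shared bus:", finish_times_shared_bus(requests))
    print("dedicated: ", finish_times_dedicated(requests))
```

Under these assumptions the last device on the shared bus waits four times as long as it would on a dedicated path, purely because of queueing behind its neighbours.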

Diablo bypass that by plugging flash modules directly into the DDR3 DIMM slots in a server, making them look like memory. Well, not quite: there's a mediating driver that manages access to these speciality devices, and you need a UEFI/BIOS update. They apparently work on Windows, Linux and VMware, though I don't know which specific OS versions are required.

Niche Problem

This is a solution for people who find flash too slow and memory too confining, and can't afford to just buy loads of DRAM. You get faster access than with flash over PCIe, persistence that DRAM can't give you, and a lower price per gigabyte than DRAM. Depending on the price, I can't imagine many workloads requiring this sort of solution, but I can see situations where it would be useful: loading a lot of data into Hadoop for processing, for example, or a high-frequency trading application.

And really, why do we still have this weird bus-based access to I/O? With DMA controllers and memory-mapped I/O, we're already trying to access everything as if it were memory. Why not just plug storage devices directly into memory?
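The closest everyday analogue you can poke at from userland is a memory-mapped file: the OS lets you index into a file's contents like a byte array. This is only an illustration of the "treat storage as memory" idea using Python's standard `mmap` module; it is not how Diablo's driver works (I don't know its internals), and `demo_mmap` is a hypothetical helper.

```python
import mmap
import os
import tempfile


def demo_mmap() -> bytes:
    """Write a scratch file, modify it through a memory mapping, read it back."""
    fd, path = tempfile.mkstemp()
    try:
        # A small scratch file stands in for "storage".
        with os.fdopen(fd, "wb") as f:
            f.write(b"hello, storage")
        # Map the whole file (length 0) and treat it like a mutable byte array.
        with open(path, "r+b") as f:
            with mmap.mmap(f.fileno(), 0) as mm:
                mm[0:5] = b"HELLO"  # plain slice assignment, no write() call
                mm.flush()          # ask the OS to push the change back to the file
        # Read back through the ordinary file API to show the change persisted.
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo_mmap())
```

The program never calls `write()` to change the file's contents; it just assigns to a slice of what looks like memory, and the OS takes care of getting it onto storage.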

And if we continue to see convergence of storage and compute, why bother with flash disks when you can just have flash memory? Leave disk I/O for data that doesn't need memory-channel speeds, and bring the rest even closer to the CPU.

Weirdness

There is weirdness about this company, though. They were apparently founded in 2003, and their website doesn’t seem to have gotten a lot of love since then. There’s precious little in the Solutions section, for example. This is in spite of raising $36 million a bit over a year ago. It looks like the company has either been in stealth mode prior to 2012, or it’s just taken a long time to get this product designed and built.

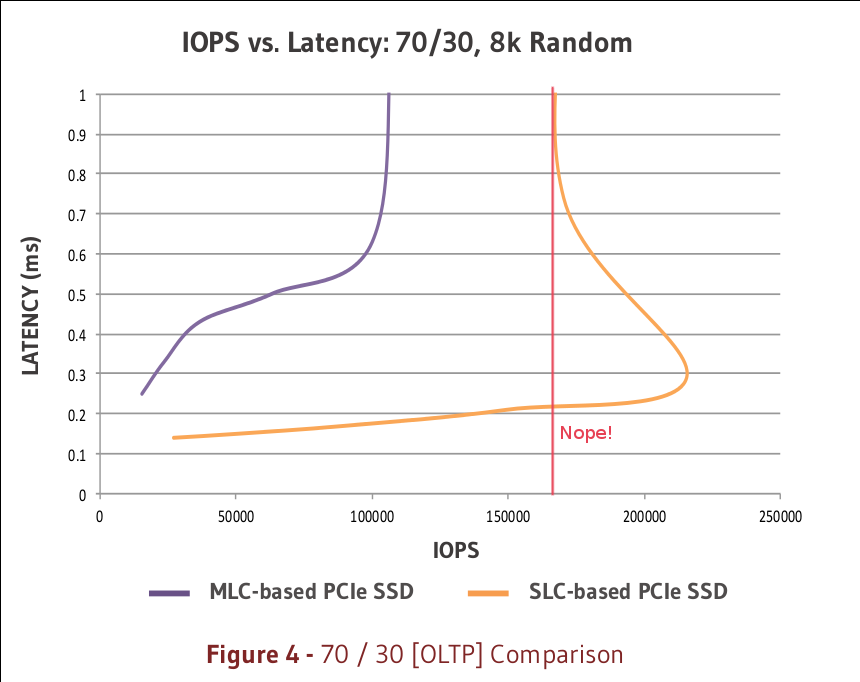

One other thing that really needs fixing is whatever happened to the charts in this whitepaper [PDF]. Here’s the most egregious example:

This chart seems to suggest that you get two different latencies when you go above about 160,000 IOPS. That implies latency isn't a function of IOPS at all: it's as if IOPS were a higher-order polynomial (quadratic or higher) in latency, rather than the other way round, so that y has more than one solution for certain x values. ORLY? That would be a very surprising result. Show me the data, please, and how you managed to get it.

I reckon it's more likely to be an error caused by an overly enthusiastic marketing person who discovered Bézier curves in Illustrator.

But I’m happy to be corrected either way.

Update!

Diablo got in touch with me to say that the charts look like this because of a third variable that isn't shown, effective queue depth:

The plots show data collected at increasing effective Queue Depths. Eventually, increasing the eff QD will have diminishing IOPS returns while increasing latency. As the eff QD increases, it’s not unusual (as the data indicates) to see IOPS degrade at some point… likely as a result of tuning to maximize SSD performance at an eff QD “sweet spot”

These plots are, therefore, 2-dimensional projections of a 3-dimensional curve. Actually, it’s a bit worse than that: it’s a plot of 2 dependent variables with the independent variable left off.
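Little's Law shows how a projection like that can fold back on itself. If effective queue depth is the average number of outstanding I/Os, then in steady state mean latency = queue depth / IOPS. The sketch below uses an invented queue-depth sweep (these numbers are hypothetical, not from the whitepaper, and I'm assuming the measurements behave the way Little's Law says): once IOPS peaks and starts to fall while queue depth keeps climbing, latency keeps rising, so roughly the same IOPS value turns up twice with very different latencies.

```python
# HYPOTHETICAL sweep of (effective queue depth, IOPS). Invented for
# illustration; these are NOT Diablo's numbers.
HYPOTHETICAL_SWEEP = [
    (1, 40_000), (2, 75_000), (4, 130_000), (8, 170_000),
    (16, 180_000), (32, 165_000), (64, 150_000),
]


def latency_us(queue_depth: int, iops: float) -> float:
    """Little's Law in steady state: outstanding I/Os = IOPS x latency,
    so mean latency = queue depth / IOPS (converted to microseconds)."""
    return queue_depth / iops * 1_000_000


def project(sweep):
    """Drop the independent variable (queue depth), keeping (IOPS, latency):
    exactly what a 2-D latency-vs-IOPS chart shows."""
    return [(iops, latency_us(qd, iops)) for qd, iops in sweep]


def doubles_back(points) -> bool:
    """True if IOPS falls at some step of the sweep, i.e. the projected
    curve turns back on itself along the IOPS axis."""
    return any(later[0] < earlier[0] for earlier, later in zip(points, points[1:]))


if __name__ == "__main__":
    points = project(HYPOTHETICAL_SWEEP)
    for (qd, _), (iops, lat) in zip(HYPOTHETICAL_SWEEP, points):
        print(f"QD {qd:>2}: {iops:>7,} IOPS at {lat:6.1f} us")
    print("curve doubles back:", doubles_back(points))
```

In this made-up sweep, about 165,000 to 170,000 IOPS shows up at both QD 8 and QD 32, with latencies differing by roughly a factor of four. Label each point with its queue depth, or plot both metrics against queue depth, and the apparent paradox goes away.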

Boo.

More Info

This little chart whoopsie aside, Diablo have apparently partnered with SanDisk to create MCS, with SanDisk doing the hardware and Diablo the software, more or less. The injection of funds and a few key hires suggest that Diablo are ramping up their marketing and starting to get the word out about what MCS can do.

I expect that Storage Field Day is part of that effort, so I look forward to hearing more from Diablo about where they are in the product development cycle, and what their go-to-market strategy is.

As an interesting aside, Michael Cornwall from Pure Storage is on the Technical Advisory Board for Diablo Technologies.
